Vision Sensors Technical Guide

- 1. Vision sensor

- 2. Image sensor

- 3. Pixel

- 4. Image processing (binarization)

- 5. Image processing 2 (gray scaling)

- 6. Image processing 3 (pattern searching)

- 7. Glossary for vision sensors

- 8. Vision sensor

- 9. System configuration of the vision sensor

- 10. Lens for vision sensors

- 11. Applicable series by application

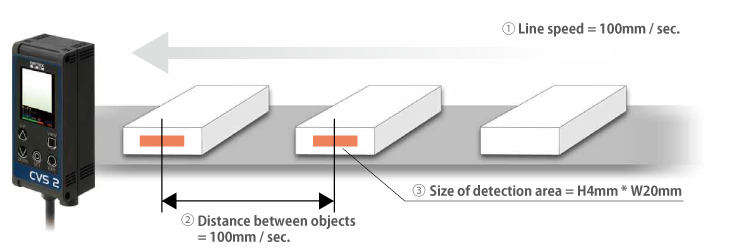

- 12. Calculation of shutter time

- 13. Example of objects that are hard to detect

1. Vision sensor

Vision sensors are used for detecting defective objects, measuring object size, character recognition, sorting objects, etc.

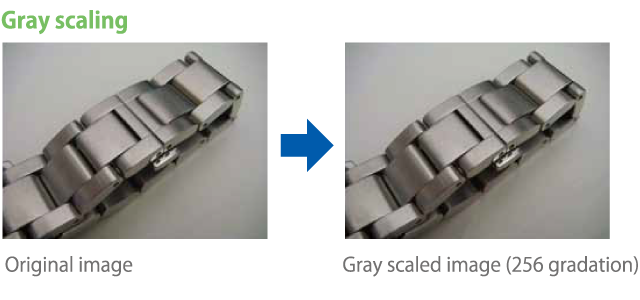

Vision sensors use image processing techniques that detect differences such as "White or Black", "Bright or Dark", "Deep color or Light color", and "Difference of the color".

For example, to detect a stain on a white object, the vision sensor measures the black area in the field of view and judges it to be a stain if that area exceeds an area threshold.
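The area-threshold check described above can be sketched in a few lines of Python. This is a minimal illustration on a tiny grayscale image (0 = black, 255 = white); the functions `dark_area` and `has_stain` and both threshold values are hypothetical and are not settings of any OPTEX FA product.

```python
# Hypothetical stain check on a grayscale image (0 = black, 255 = white).
# level_threshold and area_threshold are illustrative values only.

def dark_area(image: list[list[int]], level_threshold: int = 128) -> int:
    """Count pixels darker than level_threshold (the 'black area')."""
    return sum(1 for row in image for value in row if value < level_threshold)


def has_stain(image: list[list[int]], level_threshold: int = 128,
              area_threshold: int = 3) -> bool:
    """Judge a stain when the black area exceeds area_threshold pixels."""
    return dark_area(image, level_threshold) > area_threshold


white_object = [
    [250, 248, 252, 251],
    [249,  30,  40, 250],
    [251,  35,  25, 252],
    [250, 249, 251, 250],
]
print(dark_area(white_object))  # 4
print(has_stain(white_object))  # True, because 4 > 3
```

Raising `area_threshold` makes the check more tolerant of small specks; lowering `level_threshold` makes only very dark pixels count as stain.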

2. Image sensor

A semiconductor device called an image sensor is built into the vision sensor. The image sensor consists of many small photosensors spread over the chip; they receive light through the lens and convert it into electrical signals.

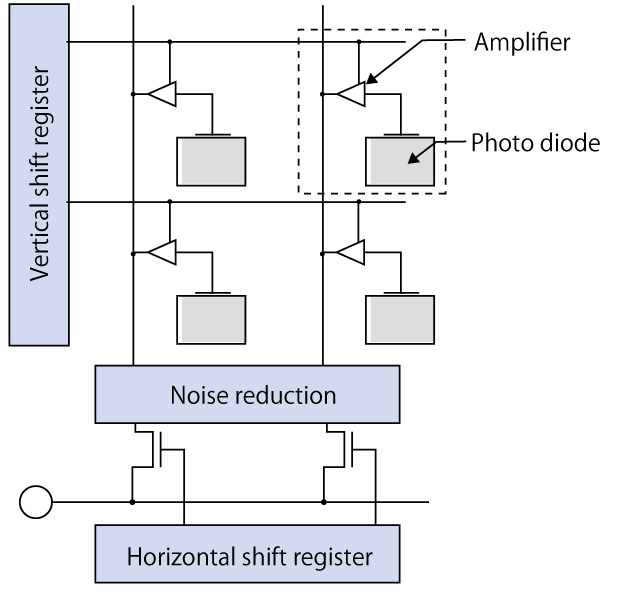

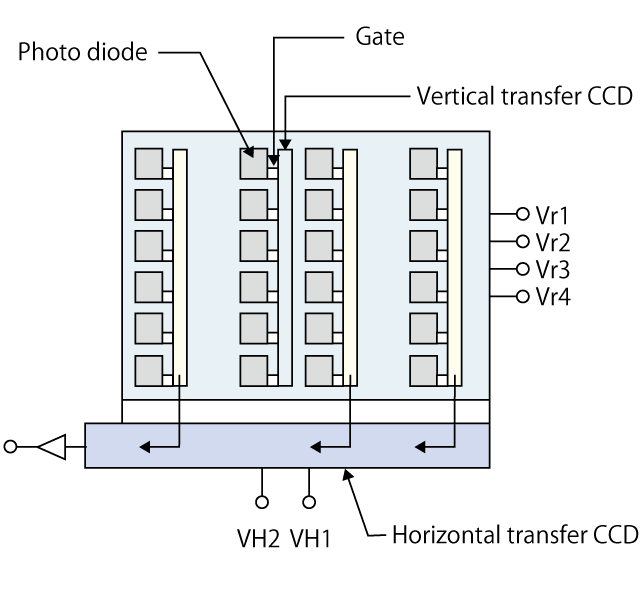

Generally, CCD and CMOS image sensors are used in cameras.

The basic structures of these devices are shown below.

CMOS image sensor

CCD image sensor

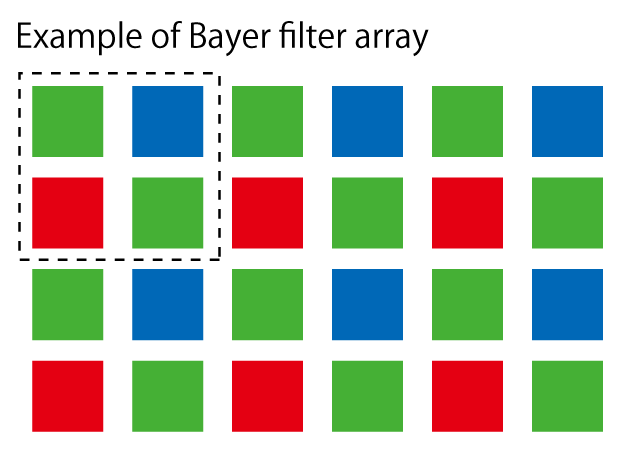

Color camera

An image sensor without color filters is a monochrome image sensor; it provides grayscale image data. To obtain color image data, a color filter is needed over each pixel. Most color image sensors use the "Bayer filter", invented by Bryce Bayer of Eastman Kodak.

The filter has red, green, and blue (RGB) areas in the ratio 50% green, 25% red, and 25% blue. The human eye is most sensitive to green, so this ratio gives output well suited to human vision.

Note: A color image sensor needs four pixels (one red, two green, one blue) to reproduce the color of one point, which means its resolution is lower than that of a monochrome image sensor.
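The filter ratio and the four-pixels-per-color point can be checked with a small sketch. It assumes an RGGB tile layout (sensors also use GRBG, BGGR, and other orders) and uses a naive "superpixel" demosaic in which each 2x2 block becomes one RGB pixel. Real cameras interpolate to keep the full pixel grid, so this only illustrates the idea; `filter_ratio` and `superpixel_demosaic` are hypothetical names.

```python
from collections import Counter

# One 2x2 Bayer tile; the RGGB order is an assumption for this example.
BAYER_TILE = [["R", "G"],
              ["G", "B"]]


def filter_ratio(tile: list[list[str]]) -> dict[str, float]:
    """Fraction of pixels covered by each color filter in one tile."""
    counts = Counter(color for row in tile for color in row)
    total = sum(counts.values())
    return {color: counts[color] / total for color in "RGB"}


def superpixel_demosaic(raw: list[list[int]]) -> list[list[tuple[int, int, int]]]:
    """Naive demosaic: each 2x2 RGGB block of raw values becomes ONE RGB pixel."""
    out = []
    for y in range(0, len(raw) - 1, 2):
        row = []
        for x in range(0, len(raw[0]) - 1, 2):
            r = raw[y][x]
            g = (raw[y][x + 1] + raw[y + 1][x]) // 2  # average the two greens
            b = raw[y + 1][x + 1]
            row.append((r, g, b))
        out.append(row)
    return out


raw = [[200, 100, 210, 110],
       [ 90,  50,  95,  55],
       [180, 120, 190, 130],
       [110,  60, 115,  65]]
print(filter_ratio(BAYER_TILE))      # {'R': 0.25, 'G': 0.5, 'B': 0.25}
print(superpixel_demosaic(raw)[0][0])  # (200, 95, 50)
```

The 4x4 raw input yields only a 2x2 color image, which is the resolution trade-off the note above describes.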


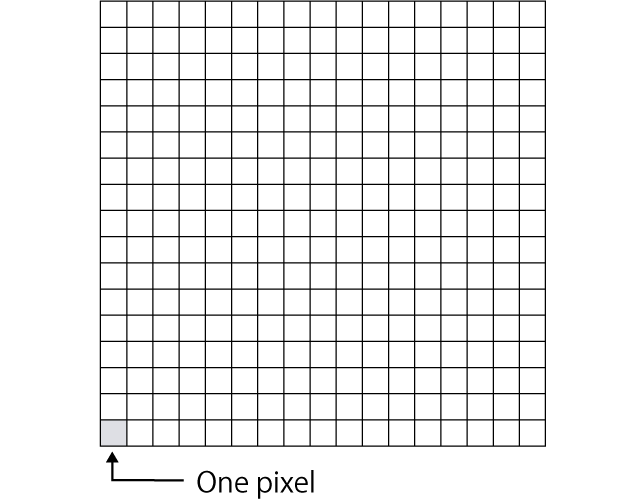

3. Pixel

A pixel is the smallest unit of an image sensor. Pixels are arranged over the chip surface of the image sensor, as shown in the figure.

The more pixels, the higher the resolution you can get. On the other hand, processing time increases.

To shorten processing time, you may need a high-speed CPU or DSP, which can raise cost. (DSP = Digital Signal Processor; here it is used for image processing.)
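To give a feel for how quickly the workload grows with pixel count, the sketch below compares a few example resolutions. It assumes 8-bit monochrome data (1 byte per pixel) and treats processing time as roughly proportional to the number of pixels; real timing depends on the algorithm and on the CPU or DSP, so the "load" figure is only a relative estimate. The resolutions and function names are hypothetical examples.

```python
# Relative workload of example sensor resolutions.
# Assumptions: 1 byte per pixel (8-bit mono) and processing time roughly
# proportional to pixel count; real timing depends on algorithm and hardware.

def pixel_count(width: int, height: int) -> int:
    return width * height


def relative_load(width: int, height: int,
                  base_w: int = 640, base_h: int = 480) -> float:
    """Pixel count relative to a 640 x 480 (VGA) baseline."""
    return pixel_count(width, height) / pixel_count(base_w, base_h)


for w, h in [(640, 480), (1280, 960), (2560, 1920)]:
    size_kib = pixel_count(w, h) / 1024  # 1 byte per pixel
    print(f"{w}x{h}: {pixel_count(w, h):,} px, {size_kib:.0f} KiB, "
          f"x{relative_load(w, h):.0f} load")
```

Doubling both width and height quadruples the pixel count, which is why higher resolution quickly calls for faster processing hardware.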


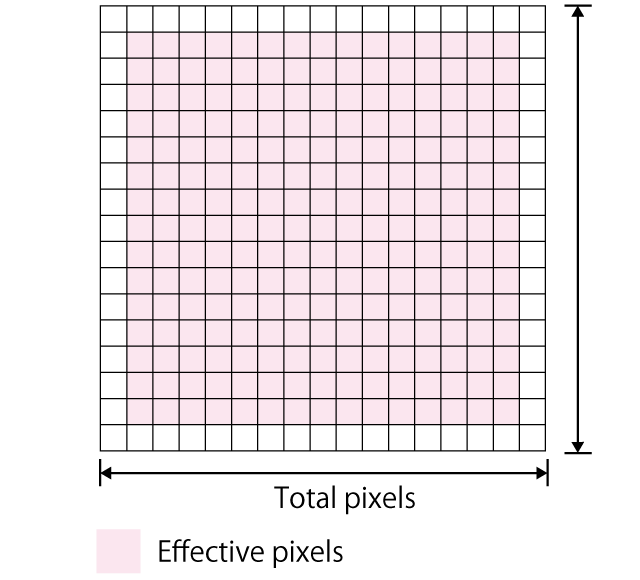

Total pixels / Effective pixels

All the pixels on the image sensor are called "Total pixels"; the pixels actually used for capturing images are called "Effective pixels".

Camera performance is based on the effective pixels. Pixels close to the edge of the chip are normally affected by optical noise, so only the effective pixels in the inner area of the image sensor chip are actually used.
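The relationship between total and effective pixels can be sketched as cropping a border off the full frame. The uniform `margin` is a hypothetical simplification; a real sensor's effective area (which need not be symmetric) is given in its datasheet.

```python
# Keep only the inner "effective" area by discarding a border of `margin`
# pixels on each side. The uniform margin is a hypothetical example.

def effective_area(frame: list[list[int]], margin: int) -> list[list[int]]:
    """Return the inner part of `frame` without a `margin`-pixel border."""
    height, width = len(frame), len(frame[0])
    return [row[margin:width - margin] for row in frame[margin:height - margin]]


total = [[0] * 8 for _ in range(6)]        # 8 x 6 = 48 total pixels
effective = effective_area(total, margin=1)
print(len(effective[0]), len(effective))   # 6 4  (24 effective pixels)
```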

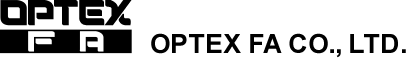

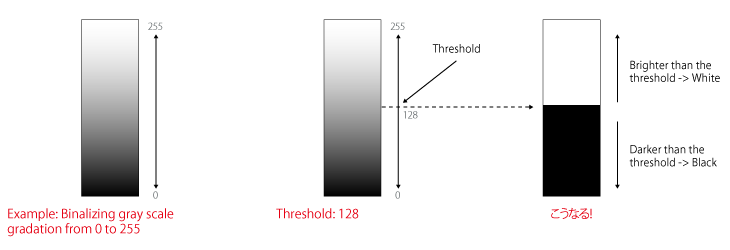

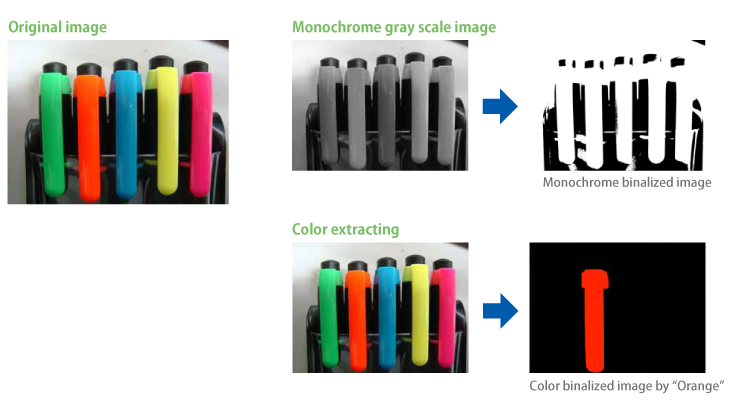

4. Image processing (binarization)

In a monochrome picture, every color is represented as a level between black and white (grayscale). Binarization classifies each pixel as black or white by comparing it with a threshold. The following example shows binarization of a grayscale gradation (0 to 255) with the threshold at level 128.

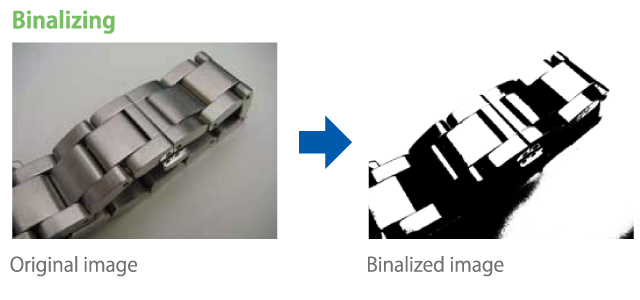
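The binarization step above amounts to a single comparison per pixel. In this sketch, levels at or above the threshold become white (255) and the rest become black (0); whether a pixel exactly at the threshold counts as white is a convention that varies between tools, so treat the `>=` as an assumption.

```python
def binarize(gray: list[int], threshold: int = 128) -> list[int]:
    """Map each 0-255 level to 0 (black) or 255 (white).

    Assumption: levels >= threshold become white; some tools use '>'
    instead, which only changes a pixel exactly at the threshold.
    """
    return [255 if value >= threshold else 0 for value in gray]


gradation = [0, 64, 127, 128, 192, 255]
print(binarize(gradation))  # [0, 0, 0, 255, 255, 255]
```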

5. Image processing 2 (gray scaling)

Binarization turns the original image into a 2-level image (black or white, for monochrome binarization), whereas gray scaling turns it into a multi-level image, e.g. 64, 256, or 1024 levels. Gray scaling is used not only for monochrome images but also for any single-color image. More gradation levels help machine vision detect things more precisely.

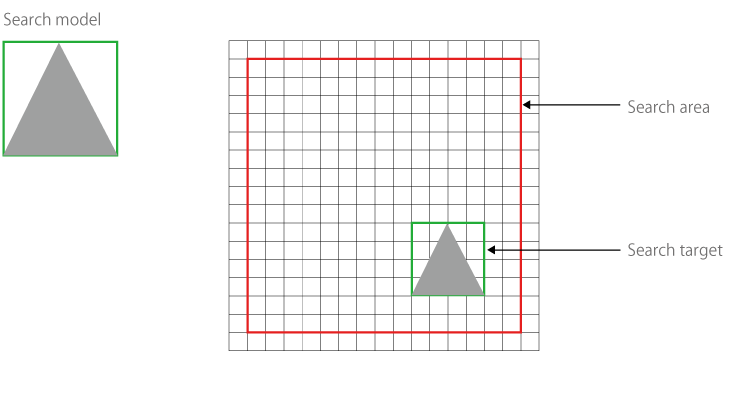
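Why more gradation levels help can be shown by quantizing two slightly different grays. This sketch assumes 8-bit input, so it is limited to 2 to 256 levels (1024-level data would need a sensor with 10-bit output); `quantize` is a hypothetical helper. With 2 levels the formula is equivalent to binarization at level 128.

```python
def quantize(value: int, levels: int) -> int:
    """Map an 8-bit value (0-255) to a gray level in 0..levels-1.

    Assumption: the source is 8-bit, so `levels` is limited to 2..256.
    """
    if not 0 <= value <= 255:
        raise ValueError("value must be in 0-255")
    if not 2 <= levels <= 256:
        raise ValueError("levels must be in 2-256 for 8-bit input")
    return value * levels // 256


# Two slightly different grays (120 and 125):
for levels in (2, 64, 256):
    print(levels, quantize(120, levels), quantize(125, levels))
# 2 levels: both 0 (indistinguishable); 64 levels: 30 vs 31; 256 levels: 120 vs 125
```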

6. Image processing 3 (pattern searching)

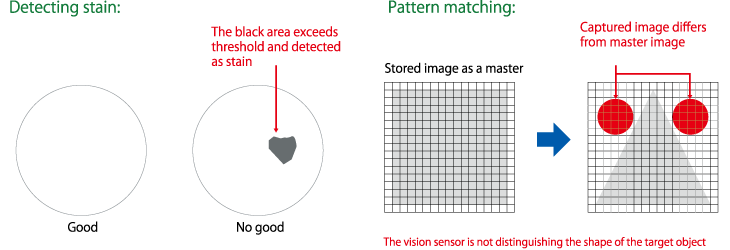

Pattern searching finds, within the captured image, the shape that most closely matches a stored image (the search model).

It outputs a correlation value, indicating how closely the found image resembles the search model, and the coordinates of the found image.
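A minimal pattern search can be sketched as sliding the search model over every position and scoring each patch. The sketch uses zero-mean normalized cross-correlation (one common correlation measure, scoring from -1 to 1) and a brute-force scan; the matching algorithm used inside the vision sensor is not described in this guide, and production implementations are far faster. `ncc` and `pattern_search` are hypothetical names.

```python
import math

Image = list[list[float]]


def ncc(patch: Image, model: Image) -> float:
    """Zero-mean normalized cross-correlation of two equal-size images (-1 to 1)."""
    a = [v for row in patch for v in row]
    b = [v for row in model for v in row]
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0:
        return 0.0  # a flat patch or model has no pattern to correlate
    return sum(x * y for x, y in zip(da, db)) / denom


def pattern_search(image: Image, model: Image) -> tuple[float, tuple[int, int]]:
    """Try every position; return (best correlation, (x, y) of the match's top-left)."""
    mh, mw = len(model), len(model[0])
    best_score, best_xy = -2.0, (0, 0)
    for y in range(len(image) - mh + 1):
        for x in range(len(image[0]) - mw + 1):
            patch = [row[x:x + mw] for row in image[y:y + mh]]
            score = ncc(patch, model)
            if score > best_score:
                best_score, best_xy = score, (x, y)
    return best_score, best_xy


model = [[0, 255],
         [255, 0]]
image = [[100] * 5 for _ in range(5)]
image[1][2], image[1][3] = 0, 255
image[2][2], image[2][3] = 255, 0
score, (x, y) = pattern_search(image, model)
print(f"correlation={score:.2f} at x={x}, y={y}")  # correlation=1.00 at x=2, y=1
```

Because the mean is subtracted and the result is normalized, the score is unaffected by uniform brightness or contrast changes of the patch, which is one reason this measure is popular for matching.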

7. Glossary for vision sensors

| Term | Description |
|---|---|
| Resolution | Describes the ability of a system to distinguish, detect, and/or record physical details by electromagnetic means. It is normally expressed as the number of pixels in X and Y. |
| Effective pixels | The pixels actually used for imaging. The edge area of the image sensor chip is normally affected by noise, or the image there is distorted, so the pixels in the central area are used. |
| Focal length | The distance between the center of the lens and the point where collimated light rays are brought to focus. A wider-angle lens has a shorter focal length. Written as, for example, f25mm. |
| Depth of field (DOF) | The distance between the nearest and farthest objects in a scene that appear acceptably sharp in an image. Reducing the aperture size increases the depth of field. |
| Field curvature aberrations | Image points near the optical axis are in sharp focus on the flat image sensor, but off-axis rays come into focus in front of the sensor. As a result, the periphery of the image is out of focus even when the center is sharp. |
| Angle of view (AOV) | Describes the angular extent of a given scene that is imaged by a camera. It is often used interchangeably with the more general term field of view. The smaller the AOV, the smaller the distortion. |
| Working distance (WD) | The distance from the front of the lens to the target object. |
| Field of view (FOV) | The area of the inspection target captured on the camera's image sensor. The size of the field of view and the size of the camera's image sensor directly affect the image resolution. |
| Optical magnification | The ratio of the size of the image formed on the image sensor to the size of the FOV. |
| Binarization | Classifies each pixel as black or white using a threshold. It makes the data size very small, but the gradation of the image is lost. |
| Shutter time | The period during which the shutter is open. It is determined by the moving speed of the target object and the brightness of the lighting. When the object moves fast, the shutter time must be short, and the lighting must be bright enough to capture a bright image within that short period. |
| Gray scale image processing | Image processing using multi-level grayscale data. It keeps the image resolution high, but processing takes longer because the data size is larger. |
| Progressive scan | A way of displaying images in which all the lines of each frame are drawn in sequence, in contrast to interlaced scan. Because all the lines of a frame are processed, the data size is larger and processing takes longer. |
| Sub pixel processing | Processing at a precision finer than one pixel, e.g. 1/10 of a pixel. The brightness or color at each sub-pixel position is calculated from the brightness or color of neighboring pixels. This enables very precise measurement. |
| Interlaced scan | A way of displaying images, used in traditional analog television systems, in which the odd lines and then the even lines of each frame are drawn alternately, so each pass (field) contains only half the lines of a full frame. |
| Pattern searching | Searches the captured image for the shape that most closely matches a stored image (the search model). It outputs a correlation value, indicating how closely the found image resembles the search model, and the coordinates of the found image. |
| Polarizing filter | A filter that passes only light waves vibrating in one direction. In machine vision, it is mainly used to control reflections so that the camera can capture a clear image. |
| Extension tube (Extension ring) | A ring- or tube-shaped spacer placed between the lens and the camera. It increases the lens-to-sensor distance so the lens can focus closer, and is used especially to shorten the working distance. |
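The glossary entry on sub pixel processing says values between pixels are calculated from neighboring pixels. One common technique is to locate an edge by linear interpolation between the two pixels where the brightness crosses a threshold. The sketch below shows that technique on a 1-D brightness profile; it is an illustration, not necessarily the method used by any particular vision sensor, and `subpixel_edge` is a hypothetical name.

```python
from typing import Optional


def subpixel_edge(profile: list[float], threshold: float) -> Optional[float]:
    """Position (in pixels) where a rising brightness profile crosses `threshold`.

    Linear interpolation between the two neighboring pixels gives a result
    finer than one pixel. Returns None if the profile never crosses.
    """
    for i in range(len(profile) - 1):
        a, b = profile[i], profile[i + 1]
        if a < threshold <= b:
            return i + (threshold - a) / (b - a)
    return None


print(subpixel_edge([10, 10, 40, 200, 210], 120))  # 2.5
```

A whole-pixel method could only report the edge at pixel 2 or 3; interpolation places it halfway between them.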

8. Vision sensor

The main functions of a vision sensor are to capture an image of the target object, check the image by comparing it with a master image, and then output the inspection result. It has a camera with an image sensor, e.g. a CCD (Charge-Coupled Device) or CMOS (Complementary Metal-Oxide-Semiconductor) image sensor, that provides digital image data for image processing such as pattern matching, measurement, and OCR.
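The capture, compare, and output cycle described above can be sketched as a pixel-by-pixel comparison with a master image that returns OK or NG. The `tolerance` and `max_diff_pixels` values are hypothetical; real vision sensors offer many inspection tools (pattern matching, measurement, OCR) rather than this single difference check.

```python
# Hypothetical inspection cycle: compare a captured image with a master
# image and output OK/NG. Tolerances are illustrative assumptions.

def inspect(captured: list[list[int]], master: list[list[int]],
            tolerance: int = 30, max_diff_pixels: int = 0) -> str:
    """Count pixels differing from the master by more than `tolerance`."""
    diff_pixels = sum(
        1
        for row_c, row_m in zip(captured, master)
        for c, m in zip(row_c, row_m)
        if abs(c - m) > tolerance
    )
    return "OK" if diff_pixels <= max_diff_pixels else "NG"


master = [[200, 200], [200, 200]]
print(inspect([[195, 210], [205, 190]], master))  # OK (all within +/-30)
print(inspect([[195, 40], [205, 190]], master))   # NG (one pixel differs by 160)
```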

9. System configuration of the vision sensor

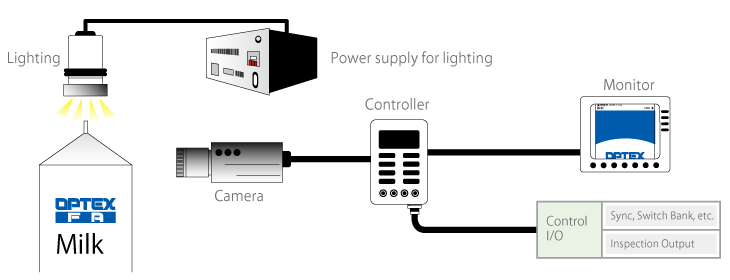

We offer both a higher-performance vision sensor with a separate camera and controller, and a cost-efficient all-in-one vision sensor.

General system configuration in industry

It consists of a camera, controller, monitor, lighting, and a power supply for the lighting.


OPTEX FA MVS vision sensor

The MVS series controller has a built-in display and a power supply for LED lighting, so the system consists of just the controller, camera, and LED lighting. Up to 3 cameras can be connected to one MVS controller, which makes installation efficient and setup easy.


OPTEX FA all-in-one CVS vision sensor

The CVS series vision sensor has the camera, LED lighting, controller, and display all built in, so basically no other equipment is needed. The system can be configured easily and cost-efficiently.

10. Lens for vision sensors

Performance of the lens of CVS series

The lens is built into the housing, so it cannot be replaced by the user; however, this structure achieves a high IP67 protection rating. Choose the model according to the working distance and field of view.

| Model | CVSE1-RA, CVS1-RA, CVS2-RA, CVS3-RA: Working Distance | CVSE1-RA, CVS1-RA, CVS2-RA, CVS3-RA: Field Of View (W × H) | CVS4-R: Working Distance | CVS4-R: Field Of View (W × H) |
|---|---|---|---|---|
| -N10 | 210 to 270mm | 40×50 to 55×65mm | - | - |
| -N20☐ | 90 to 150mm | 40×50 to 65×75mm | 90 to 150mm | 53×25 to 79×38mm |
| -N21☐ | 31 to 39mm | 17×20mm | 31 to 39mm | 21×10mm |
| -N23W | - | - | 44 to 56mm | 30×15mm |
| -N40☐ | 50 to 100mm | 46×55 to 82×98mm | 50 to 100mm | 53×25 to 115×53mm |
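As a first filtering step, the working-distance ranges from the table above can be encoded and searched for models whose range contains the planned working distance. This sketch only checks working distance; the field of view of each candidate must still be compared with the object size using the table. The "☐" in the model names stands for a variant suffix and is kept as in the table; `candidate_models` is a hypothetical helper.

```python
# Working-distance ranges (mm) transcribed from the CVS table above.
WD_RANGES_MM = {
    "CVSE1-RA/CVS1-RA/CVS2-RA/CVS3-RA": {
        "-N10": (210, 270), "-N20☐": (90, 150), "-N21☐": (31, 39), "-N40☐": (50, 100),
    },
    "CVS4-R": {
        "-N20☐": (90, 150), "-N21☐": (31, 39), "-N23W": (44, 56), "-N40☐": (50, 100),
    },
}


def candidate_models(series: str, working_distance_mm: float) -> list[str]:
    """Models of `series` whose working-distance range contains the given value."""
    return [model for model, (lo, hi) in WD_RANGES_MM[series].items()
            if lo <= working_distance_mm <= hi]


print(candidate_models("CVS4-R", 95))  # ['-N20☐', '-N40☐']
```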

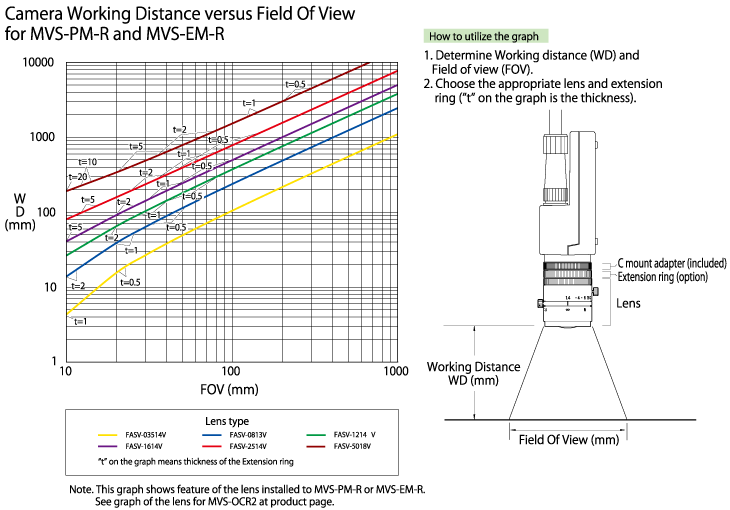

Lens for MVS series

Because the MVS series vision sensor has a separate camera and controller, the lens is interchangeable and standard CCTV lenses can be used. CCTV lenses cover working distances from very short to very long. Their focus and aperture can be adjusted on the lens itself.

Choose the lens from the following graph according to the working distance and field of view.

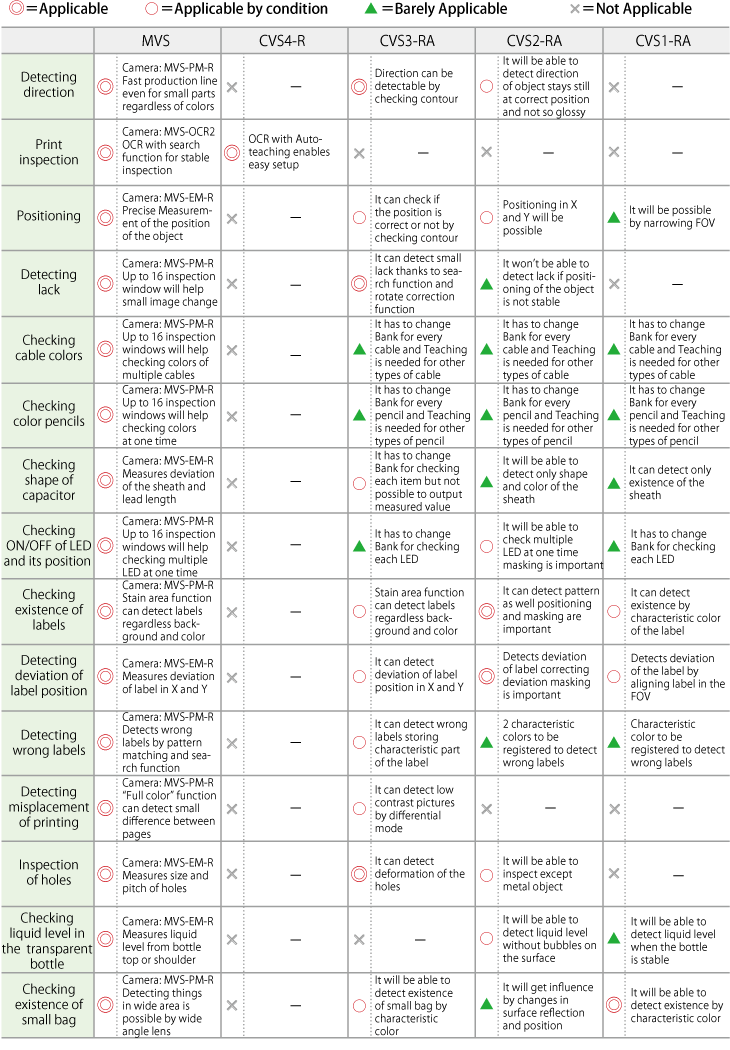
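As a rough cross-check of a reading from the graph, the thin-lens equation gives an estimate of the required focal length from the working distance and the FOV width. This is an approximation: it treats the working distance as the object-to-lens distance, and it assumes a sensor width of 4.8 mm (a nominal 1/3-inch sensor), which may not match the actual camera. `focal_length_mm` is a hypothetical helper.

```python
def focal_length_mm(working_distance_mm: float, fov_width_mm: float,
                    sensor_width_mm: float = 4.8) -> float:
    """Thin-lens estimate of the focal length for a given WD and FOV width.

    Assumptions: WD is treated as the object-to-lens distance, and the
    default sensor width (4.8 mm, nominal 1/3-inch) must be checked against
    the actual camera.
    """
    m = sensor_width_mm / fov_width_mm        # optical magnification
    return working_distance_mm * m / (1 + m)  # from 1/f = 1/u + 1/v with v = m*u


print(f"{focal_length_mm(300, 60):.1f} mm")  # 22.2 mm
```

In practice you would pick the nearest standard focal length and confirm the result against the lens graph.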

11. Applicable series by application

12. Calculation of shutter time

The time available to the vision sensor for image processing and determining the result is:

Distance between objects (100 mm) / Line speed (100 mm/s) = 1 s

1 s is more than enough for image processing and determining the result.

The longest allowable shutter time, i.e. the time during which the object may move while the shutter is open, with the allowed movement set to 1% of the detection-area width, is:

1% of the detection-area width (20 mm / 100 = 0.2 mm) / Line speed (100 mm/s) = 2 ms

2 ms is quite a short period, so in this case the lighting must be bright enough to capture a clear image.
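The two calculations above can be written as small functions so other line speeds or layouts can be checked quickly. The 1% allowed movement is taken from the example and exposed as a parameter; the function names are hypothetical.

```python
def processing_time_s(pitch_mm: float, line_speed_mm_s: float) -> float:
    """Time between consecutive objects = time available per inspection."""
    return pitch_mm / line_speed_mm_s


def max_shutter_time_s(area_width_mm: float, line_speed_mm_s: float,
                       allowed_blur_fraction: float = 0.01) -> float:
    """Longest shutter time keeping movement below a fraction of the area width."""
    allowed_movement_mm = area_width_mm * allowed_blur_fraction
    return allowed_movement_mm / line_speed_mm_s


print(processing_time_s(100, 100))                     # 1.0
print(f"{max_shutter_time_s(20, 100) * 1000:.1f} ms")  # 2.0 ms
```

Doubling the line speed halves both values, so faster lines need both quicker processing and brighter lighting.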

13. Example of objects that are hard to detect

1) Detecting differences between light-colored objects

Differences among light colors, especially light green, white, and light gray, are very hard to detect.

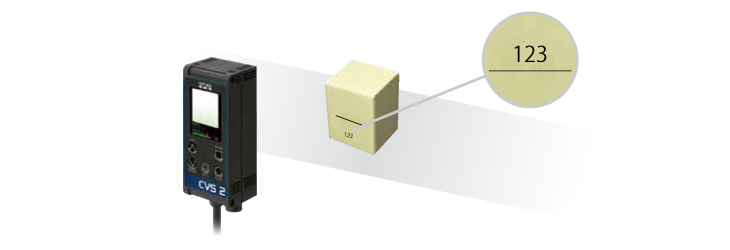

2) Small characters and thin lines

When a line is close to the size of one pixel (= FOV width / number of pixels), the camera cannot detect it as a line. For example, when the FOV width is 50 mm and the number of horizontal pixels is 200, each pixel covers 0.25 mm.
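The pixel-size arithmetic above can be turned into a quick check of how many pixels a line spans. The requirement of at least 2 pixels per line width (`min_pixels`) is a hypothetical rule of thumb for this sketch, not a product specification.

```python
def mm_per_pixel(fov_width_mm: float, horizontal_pixels: int) -> float:
    """Size on the object covered by one pixel."""
    return fov_width_mm / horizontal_pixels


def is_detectable(line_width_mm: float, fov_width_mm: float,
                  horizontal_pixels: int, min_pixels: float = 2.0) -> bool:
    """True if the line spans at least `min_pixels` pixels (hypothetical rule)."""
    return line_width_mm / mm_per_pixel(fov_width_mm, horizontal_pixels) >= min_pixels


print(mm_per_pixel(50, 200))        # 0.25
print(is_detectable(0.3, 50, 200))  # False (about 1.2 pixels)
print(is_detectable(1.0, 50, 200))  # True (4 pixels)
```

Narrowing the FOV or using a sensor with more pixels reduces the size per pixel and makes thin lines easier to detect.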

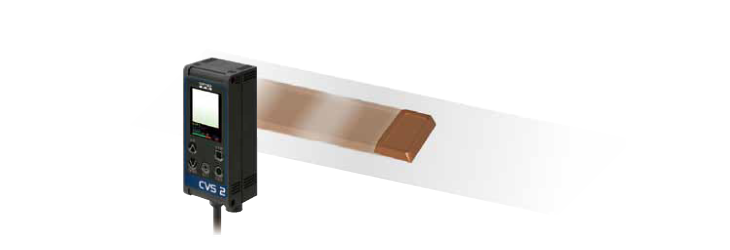

3) Fast-moving objects

When objects move fast and the shutter time is long, the captured image is blurred.

In that case, the shutter time must be short, and the lighting must be bright enough for the camera to capture a clear image within that short time.
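Motion blur can be estimated in pixels: the distance the object moves during the exposure divided by the size covered by one pixel. The speed, shutter times, FOV, and pixel count below are hypothetical example values.

```python
def motion_blur_pixels(speed_mm_s: float, shutter_s: float,
                       fov_width_mm: float, horizontal_pixels: int) -> float:
    """Blur length in pixels = movement during exposure / size per pixel."""
    movement_mm = speed_mm_s * shutter_s            # distance moved while the shutter is open
    return movement_mm / (fov_width_mm / horizontal_pixels)


print(f"{motion_blur_pixels(100, 0.010, 50, 200):.1f}")  # 4.0
print(f"{motion_blur_pixels(100, 0.002, 50, 200):.1f}")  # 0.8
```

Shortening the shutter from 10 ms to 2 ms cuts the blur from 4 pixels to under 1 pixel, at the cost of needing brighter lighting.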

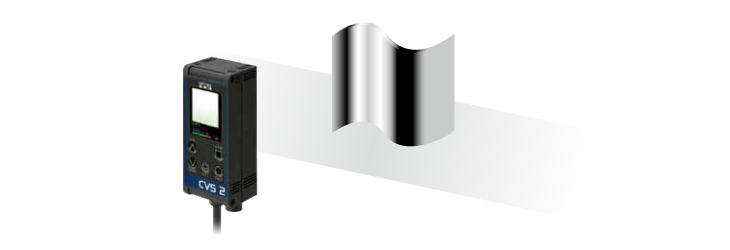

4) Objects with glossy, uneven surfaces

It is very hard to capture a clear image of objects such as glossy film, transparent bottles, and metal cans (pipes), whose glossy, uneven surfaces are reflective and whose reflections change easily.

In some cases, diffuse lighting and a polarizing filter help capture a clear image.

Note: When an application involves several of these conditions, the difficulty increases further.